(PDF) Inter-rater agreement in judging errors in diagnostic reasoning | Memoona Hasnain and Hirotaka Onishi - Academia.edu

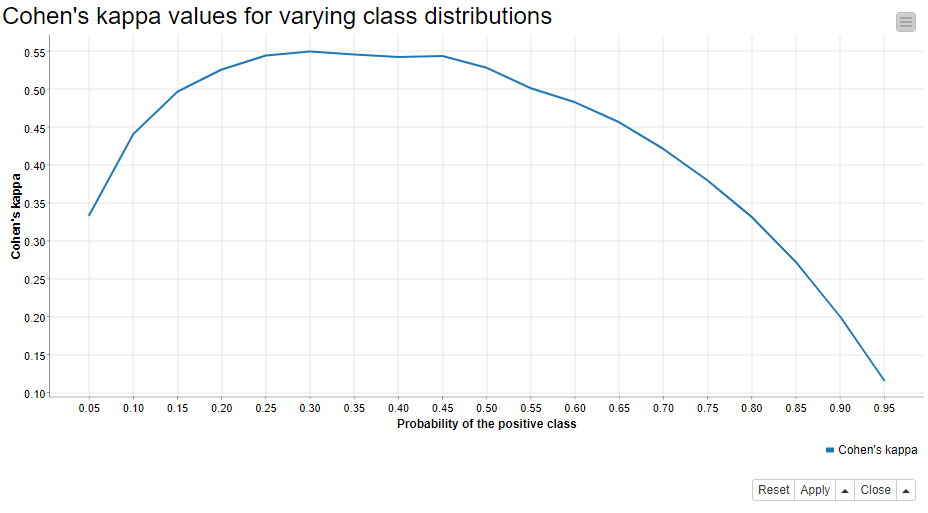

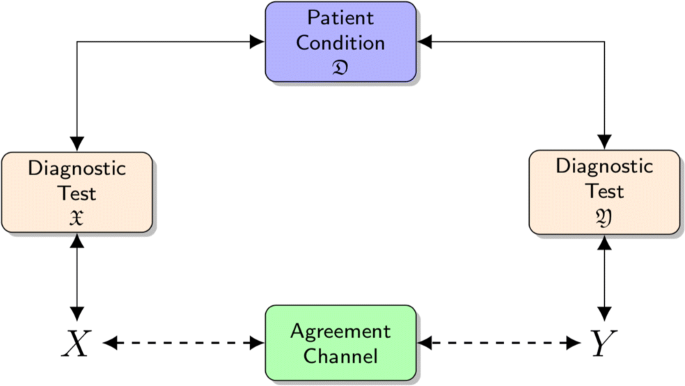

Beyond kappa: an informational index for diagnostic agreement in dichotomous and multivalue ordered-categorical ratings | SpringerLink

An Evaluation of Interrater Reliability Measures on Binary Tasks Using *d-Prime*. - Abstract - Europe PMC

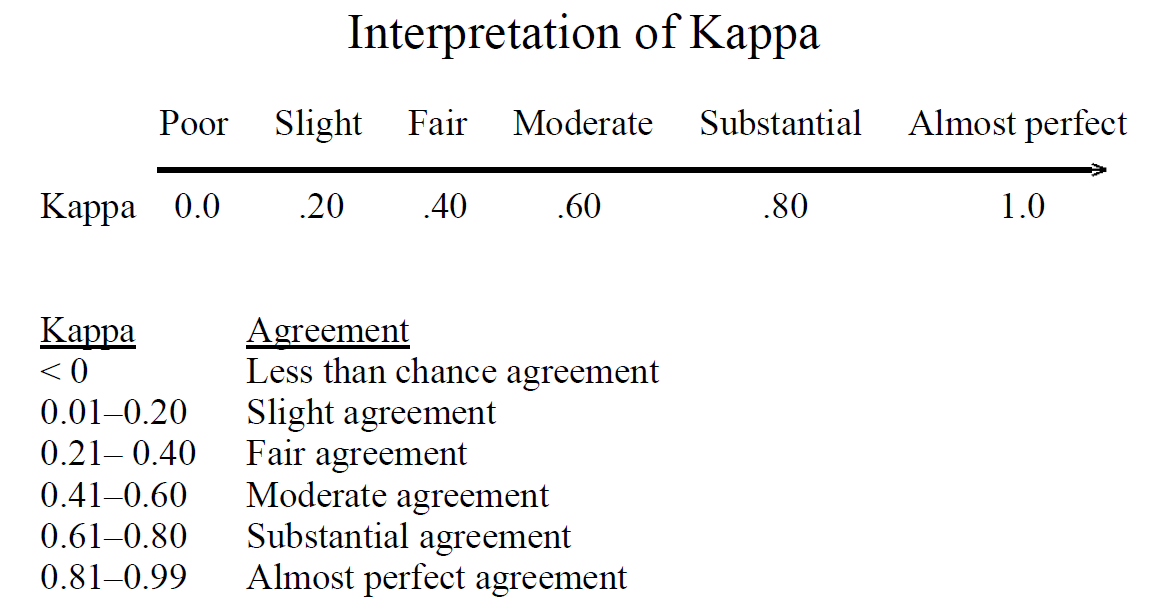

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science

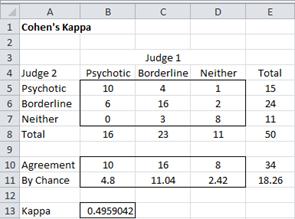

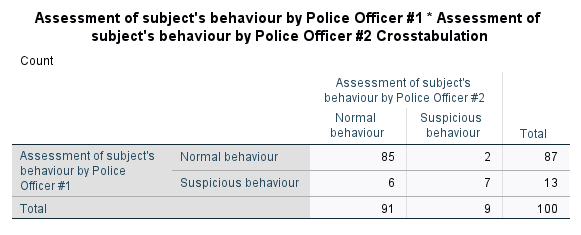

Cohen's kappa in SPSS Statistics - Procedure, output and interpretation of the output using a relevant example | Laerd Statistics